Web Scraping with Puppeteer and some more useful JavaScript nuances

This image is the most apt description of puppeteer.[ Web scraping ]

Wait, what? Web scraping with Puppeteer? But why?

The answer is: because it not only works, it’s useful! In this post we’ll learn how to leverage Puppeteer to perform web scraping. Let’s get started.

What is Puppeteer

According to its official documentation:

Puppeteer is a Node library which provides a high-level API to control Chrome or Chromium over the DevTools Protocol. Puppeteer runs headless by default, but can be configured to run full (non-headless) Chrome or Chromium.

Okay so let’s break this line down bit by bit …

- Puppeteer is a Node library:- Puppeteer is a Node library .xD

- which provides a high-level API to control Chrome or Chromium over the DevTools Protocol:- Now this has some meat… It explains that Puppeteer gives us an API for driving Chrome or Chromium from code, which in turn means we can automate anything we do in these browsers, like emulating a key press or a click.

- Puppeteer runs headless by default but can be configured to run full (non-headless) Chrome or Chromium:- Now here comes the word headless (HOLDING DOWN MY PUNS). Headless means that everything Puppeteer does in the browser happens without ANY GUI (Graphical User Interface). You won’t see a window or tab opening, buttons being clicked, or anything else; it all happens in the background. You can also work in non-headless mode so that you can see your script in action.

Prerequisites

This tutorial is beginner friendly, no advanced knowledge of code is required. If you’re following along you’ll need NodeJS installed, basic knowledge of the command line, knowledge of JavaScript and knowledge of the DOM.

All the code will be available in the given repository :

silent-lad/medium-webscraping


Let’s Get Started

I’m gonna split this tutorial into 2 steps, plus some extras that are saved for Part 2:

- Easy Step- It will make you familiar with puppeteer

- The First Jump- This will be our final objective where we will do some real web-scraping.

- Some extra stuff- To be covered in Part 2 of this article, which includes scraping a site with pagination and more.

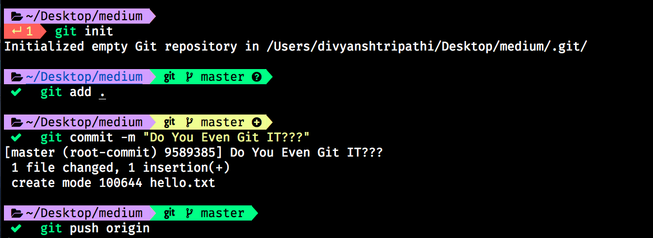

Project Setup

- Make a folder.

- Open the folder in your terminal / command prompt .

- In your terminal run,

npm init -y

This will generate a package.json for managing project dependencies.

- Then run

npm install --save puppeteer chalk

This will install Chromium too, which is around 80 MB, so don’t be surprised by the size of the package (no puns intended). Chalk is an npm package for colorful console messages; you can skip it if you want to.

- Finally, open the folder in your code editor and create an easy.js file.

Easy Steps

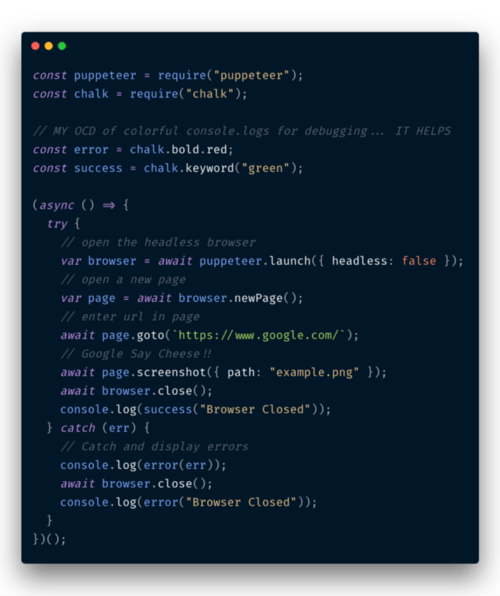

We’ll start with a short example where we’ll take a snapshot of www.google.com. YES, we can take screenshots in Puppeteer.

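So what this example does is wrap the whole script inside an async IIFE (an immediately invoked function expression), which lets us use await throughout. I’ve opened the browser in headless mode, and I suggest you keep headless: true if you are a beginner; switch to headless: false whenever you want to watch the script drive a visible browser window.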
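A minimal sketch of what easy.js could look like is shown below. Treat it as an illustration under a few assumptions rather than the exact script from the repository: the helper name `takeSnapshot` is made up, the Puppeteer module is passed in as a parameter so the function can also be tried with a stand-in object, and plain `console.log` is used instead of chalk.

```javascript
// easy.js (sketch): open a page and save a screenshot of it.
// `takeSnapshot` is a hypothetical helper name, not part of Puppeteer.
async function takeSnapshot(puppeteer, url, path) {
  let browser;
  try {
    // headless: true keeps everything in the background;
    // switch to false to watch a real window open.
    browser = await puppeteer.launch({ headless: true });
    const page = await browser.newPage();
    await page.goto(url);
    await page.screenshot({ path });
    return path;
  } catch (err) {
    console.error('Something went wrong:', err.message);
    return null;
  } finally {
    // Runs on success and on failure, so the browser never lingers.
    if (browser) {
      await browser.close();
      console.log('Browser Closed');
    }
  }
}
```

In easy.js the call would sit inside the async IIFE: `(async () => { await takeSnapshot(require('puppeteer'), 'https://www.google.com', 'example.png'); })();`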

And if you are struggling with code always remember the link to the repo is given above.

Now run this script with :-

node easy.js

If everything went well, you will see an example.png in your folder and a green console log message saying “Browser Closed”. Always keep your script inside a try/catch block: if the script terminates because of an error before the browser is closed, the headless browser keeps running in the background.

The First Jump

Now you must be familiar with puppeteer and how it’s working so let’s jump onto THE REAL WEB SCRAPING.

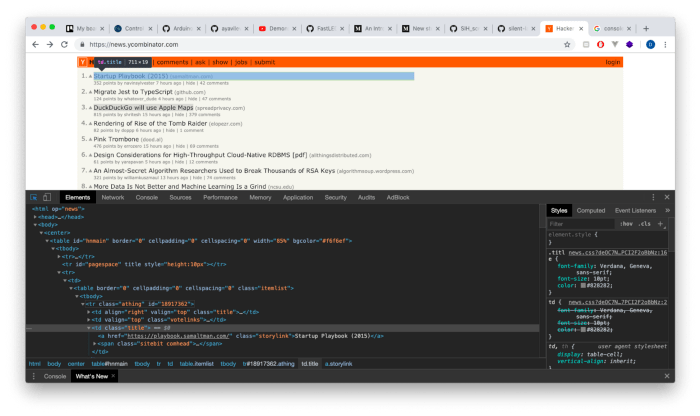

We’ll be scraping a site that is renowned among techies, i.e. https://news.ycombinator.com/. We’ll be scraping the headlines of the top ten articles and the links to those articles. I suggest you visit the site first to see what we are going to do.

Setup

The first step of web scraping is to acquire the selectors. Remember when you learned CSS and there were selectors like .text? In the same way, we have to acquire selectors for the elements of the page we want to scrape. I’ll be scraping the heading, the link to the full article, and the points for each of the top ten articles on the first page.

Also make a new file giantLeap.js and start coding along .

To find the selector of your element, press ctrl+shift+j (on Windows) or command+option+j (on Mac) and click on the little page inspector icon.

Here is a small example. You can see the page inspector highlighted in blue in the upper left corner of the console.

Now here’s the list of things we’ll extract from each article in the list and their selectors:-

- Title (a.storylink)

- Link to the article (a.storylink, but the link is stored in href attribute)

- Age of the article (span.age)

- Score given to it. (span.score)

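All the given selectors are common to every article on the page, so we will use document.querySelectorAll for selecting them. If this is new to you, refer to DOM interactions in JavaScript. Also confirm each selector in the inspector before relying on it, since a site’s markup can change over time.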

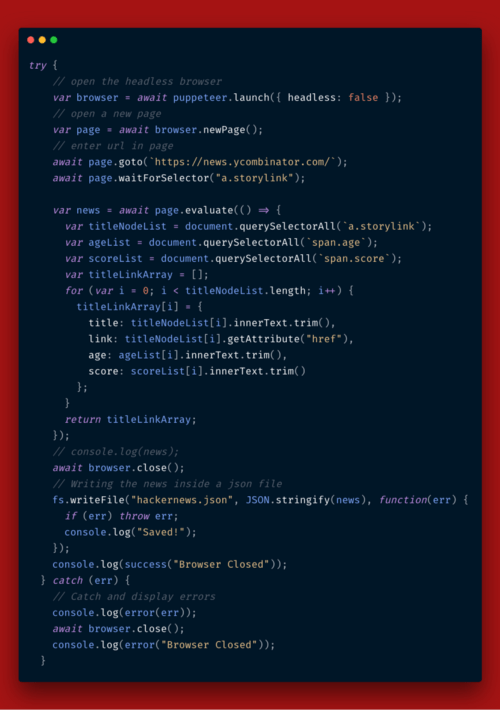

Page.evaluate

This function is used to enter the DOM of the given page and access it as if you were in the console of the browser. REMEMBER: the code inside page.evaluate runs inside the Chromium browser, so variables declared outside it in your script are not accessible to the browser (i.e. to the code inside page.evaluate) unless you pass them in as extra arguments to page.evaluate.
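The scoping rule can be illustrated without launching a browser. The sketch below uses a made-up stand-in, `fakeEvaluate`, that mimics the idea in plain Node: the function travels as source text and runs in a separate context, with its arguments copied across as JSON. It is not Puppeteer’s implementation, only an approximation of the behaviour described above.

```javascript
const vm = require('vm');

// Hypothetical stand-in for page.evaluate: the function is sent as source
// text and run in a fresh context, so it loses access to outer variables.
function fakeEvaluate(fn, ...args) {
  const browserSide = vm.createContext({});
  const source = `(${fn.toString()})(...${JSON.stringify(args)})`;
  return vm.runInContext(source, browserSide);
}

const selector = 'a.storylink';

// 1) Relying on the outer variable fails on the "browser" side.
let failure = null;
try {
  fakeEvaluate(() => selector.toUpperCase());
} catch (err) {
  failure = err.name;
}
console.log(failure); // ReferenceError

// 2) Passing the value as an argument works.
const result = fakeEvaluate((sel) => sel.toUpperCase(), selector);
console.log(result); // A.STORYLINK
```

With real Puppeteer the same pattern reads `await page.evaluate((sel) => document.querySelectorAll(sel).length, selector)`, where `selector` is handed over as the second argument.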

Getting The Information -

Here titleNodeList will get a list of nodes matching the selector (‘a.storylink’), thus getting all the titles.

In the for loop, we access each node and get its innerText(title) and href value (link) and return the value out of page.evaluate to store it in news.

TitleLinkArray is an array of objects where each object stores the information of an article.
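A minimal sketch of the step just described, reusing the names from the text (`titleNodeList`, `titleLinkArray`, `news`). The wrapper `getTitlesAndLinks` is a made-up name, and it assumes `page` is a Puppeteer page that has already navigated to Hacker News. The `a.storylink` selector is the one listed earlier; verify it in the inspector before relying on it.

```javascript
// Hypothetical helper: `page` is assumed to be a Puppeteer Page that is
// already on https://news.ycombinator.com/.
async function getTitlesAndLinks(page) {
  // Wait until at least one title link exists in the DOM.
  await page.waitForSelector('a.storylink');

  // Everything inside this callback runs in the browser, not in Node.
  const news = await page.evaluate(() => {
    const titleNodeList = document.querySelectorAll('a.storylink');
    const titleLinkArray = [];
    for (let i = 0; i < titleNodeList.length; i++) {
      titleLinkArray[i] = {
        title: titleNodeList[i].innerText.trim(),
        link: titleNodeList[i].getAttribute('href'),
      };
    }
    return titleLinkArray;
  });

  return news;
}
```

Each entry of the returned array then looks like `{ title: '...', link: '...' }`, one object per article.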

A few things to notice in the above example:-

- **page.waitForSelector:-** While doing web scraping from a browser you have to wait for the page to be loaded. This function takes a selector as an argument and waits until an element matching that selector is available in the page.

- **For loop:-** If you come from the world of functional JS you might be inclined to use map here, but BEWARE: the NodeList returned by querySelectorAll is not a proper JavaScript array, so you cannot use map, reduce, flat etc. on it directly.
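If map is what you want, the list can be converted to a real array first. The sketch below uses a plain array-like object as a stand-in for a NodeList (an assumption made here because Node has no DOM); the object and its contents are invented for illustration.

```javascript
// Stand-in for what querySelectorAll returns: indexed items plus a length,
// but no Array methods such as map.
const nodeListLike = {
  0: { innerText: 'First story', href: 'https://example.com/1' },
  1: { innerText: 'Second story', href: 'https://example.com/2' },
  length: 2,
};

console.log(typeof nodeListLike.map); // undefined

// Array.from copies any array-like into a real array, so map is available.
const titles = Array.from(nodeListLike).map((node) => node.innerText);
console.log(titles); // [ 'First story', 'Second story' ]
```

Inside page.evaluate the same conversion would be `Array.from(document.querySelectorAll('a.storylink'))`, after which map, reduce and friends work as usual.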

The web scraping tutorial is almost complete now. We only have to scrape the age and score of the articles in a similar fashion and store everything as either JSON or CSV.

So here’s the complete code
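As a rough sketch, a complete giantLeap.js could look like the following. Several things here are assumptions: the function name `scrapeHackerNews` is invented, the Puppeteer module is passed in as a parameter so the function can be tried with a stub, and the age and score lists are assumed to line up one-to-one with the titles. The selectors are the ones listed in the Setup section.

```javascript
const fs = require('fs');

// giantLeap.js (sketch). `scrapeHackerNews` is a hypothetical name; the
// Puppeteer module is passed in so the function can be tried with a stub.
async function scrapeHackerNews(puppeteer, outFile = 'hackernews.json') {
  const browser = await puppeteer.launch({ headless: true });
  try {
    const page = await browser.newPage();
    await page.goto('https://news.ycombinator.com/');
    await page.waitForSelector('a.storylink');

    // Runs inside the browser: collect the four fields per article.
    const news = await page.evaluate(() => {
      const titles = document.querySelectorAll('a.storylink');
      const ages = document.querySelectorAll('span.age');
      const scores = document.querySelectorAll('span.score');
      const textAt = (nodes, i) => (nodes[i] ? nodes[i].innerText.trim() : null);

      const articles = [];
      // Top ten only. Assumption: the i-th age and score belong to the
      // i-th title; a row without a score would break that alignment.
      for (let i = 0; i < titles.length && i < 10; i++) {
        articles.push({
          title: textAt(titles, i),
          link: titles[i].getAttribute('href'),
          age: textAt(ages, i),
          score: textAt(scores, i),
        });
      }
      return articles;
    });

    // Back in Node: persist the result as pretty-printed JSON.
    fs.writeFileSync(outFile, JSON.stringify(news, null, 2));
    return news;
  } finally {
    // Close the browser even if a step above throws.
    await browser.close();
  }
}
```

To run it for real, call it with the actual library at the bottom of the file: `scrapeHackerNews(require('puppeteer')).then((news) => console.log('Saved', news.length, 'articles')).catch(console.error);`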

If everything went well you would have a hackernews.json in your project folder. Thus you have successfully scraped HackerNews .

There is much more to web scraping, like going through different pages. I will cover that in the next part of this tutorial series.

Thanks for reading this long post! I hope it helped you understand Web Scraping a little better. If you liked this post, then please do give me a few 👏 . You are welcome to comment and ask anything!